| Sep 18, 2025 | One paper accepted to NeurIPS 2025. |

| Jun 26, 2025 | One paper accepted to ICCV 2025. |

| Feb 26, 2025 | Two papers accepted to CVPR 2025 (both as highlight). |

| Jan 23, 2025 | One paper accepted to ICLR 2025. |

| Sep 26, 2024 | One paper accepted to NeurIPS 2024. |

| Jul 04, 2024 | Co-organizing OOD-CV workshop at ECCV 2024. Call for papers at ood-cv.org. |

| Jul 01, 2024 | Two papers accepted to ECCV 2024. |

| Jun 10, 2024 | I will present our Feint6K dataset at WINVU @ CVPR 2024. |

| Feb 28, 2024 | One paper accepted to IEEE TMM. |

see all publications here

-

SpatialReasoner: Towards Explicit and Generalizable 3D Spatial Reasoning

In Advances in Neural Information Processing Systems , 2025

3D Vision Vision-Lanugage We introduce SpatialReasoner, a novel large vision-language model (LVLM) that address 3D spatial reasoning with explicit 3D representations shared between stages – 3D perception, computation, and reasoning. Explicit 3D representations provide a coherent interface that supports advanced 3D spatial reasoning and enable us to study the factual errors made by LVLMs.

-

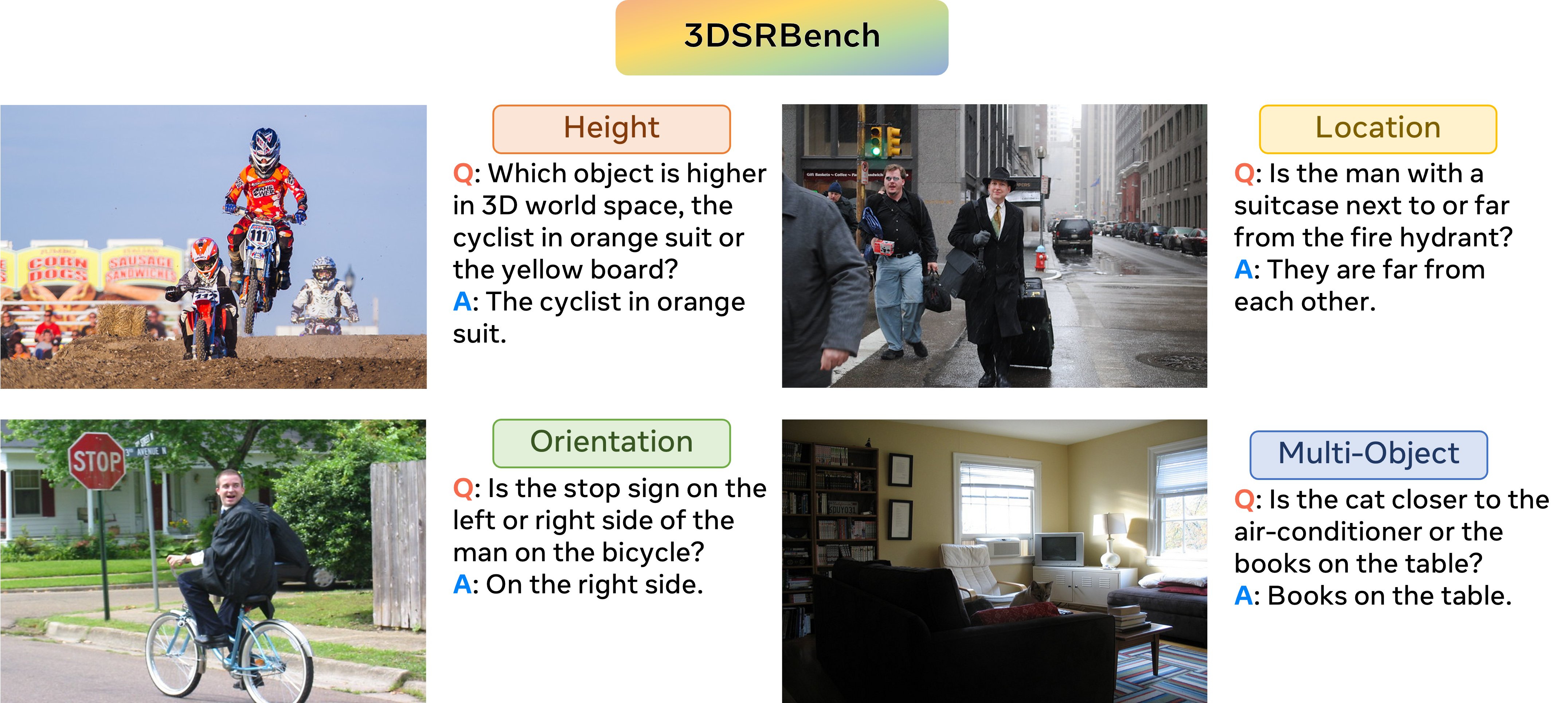

3DSRBench: A Comprehensive 3D Spatial Reasoning Benchmark

In Proceedings of the IEEE/CVF International Conference on Computer Vision (ICCV) , Oct 2025

3D Vision Vision-Lanugage We present 3DSRBench, a comprehensive 3D spatial reasoning benchmark.

-

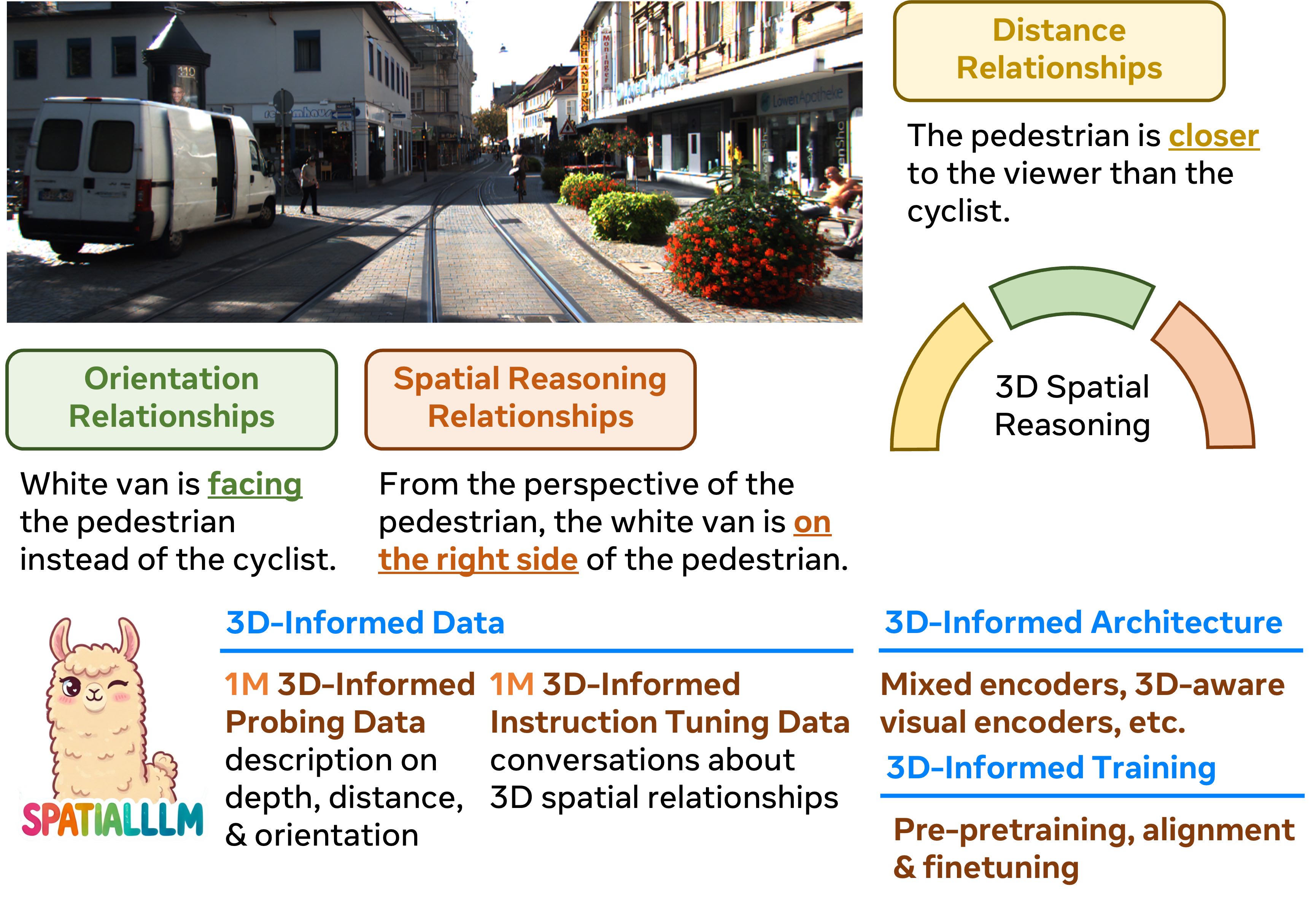

SpatialLLM: A Compound 3D-Informed Design towards Spatially-Intelligent Large Multimodal Models

In Proceedings of the IEEE/CVF conference on computer vision and pattern recognition , Oct 2025

(Highlight, 3.0%)

3D Vision Vision-Lanugage We systematically study the impact of 3D-informed data, architecture, and training setups and present SpatialLLM, an LMM with advanced 3D spatial reasoning abilities.

-

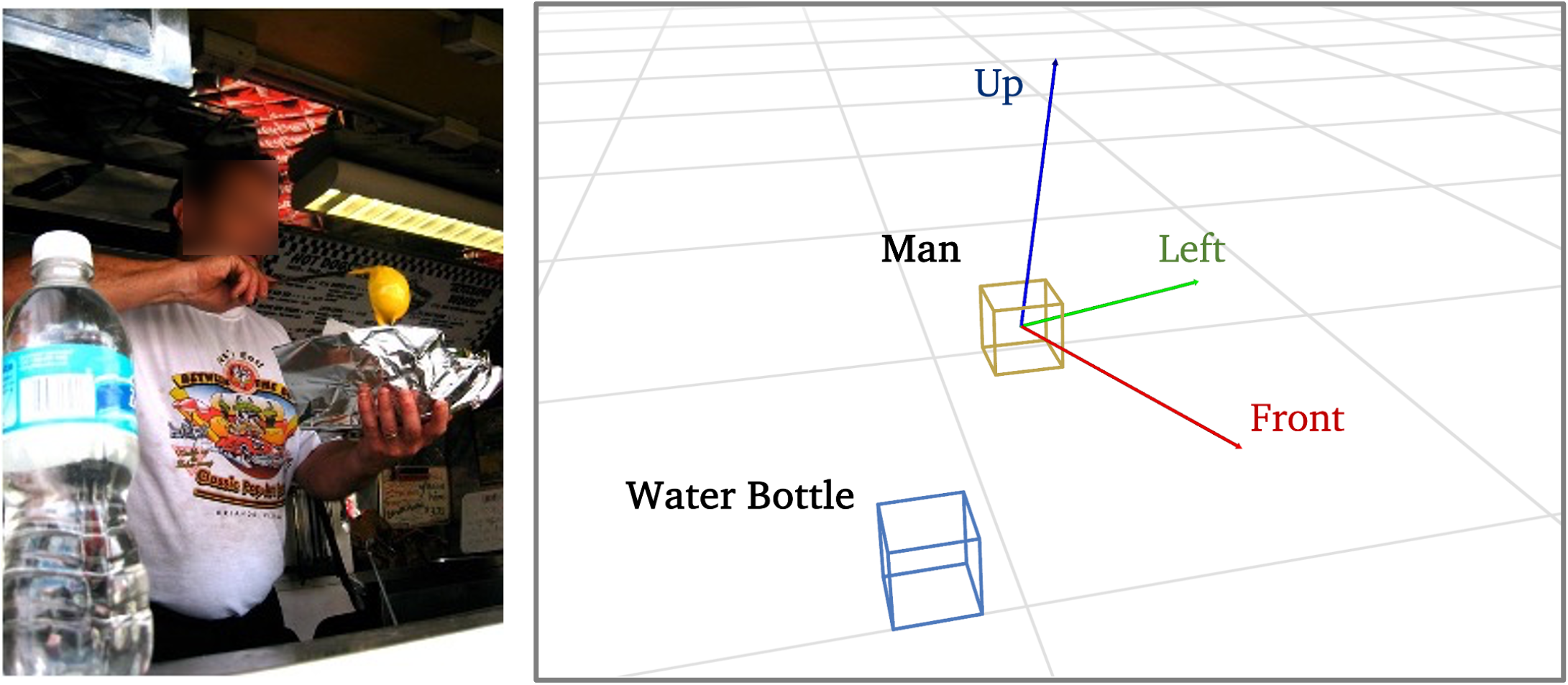

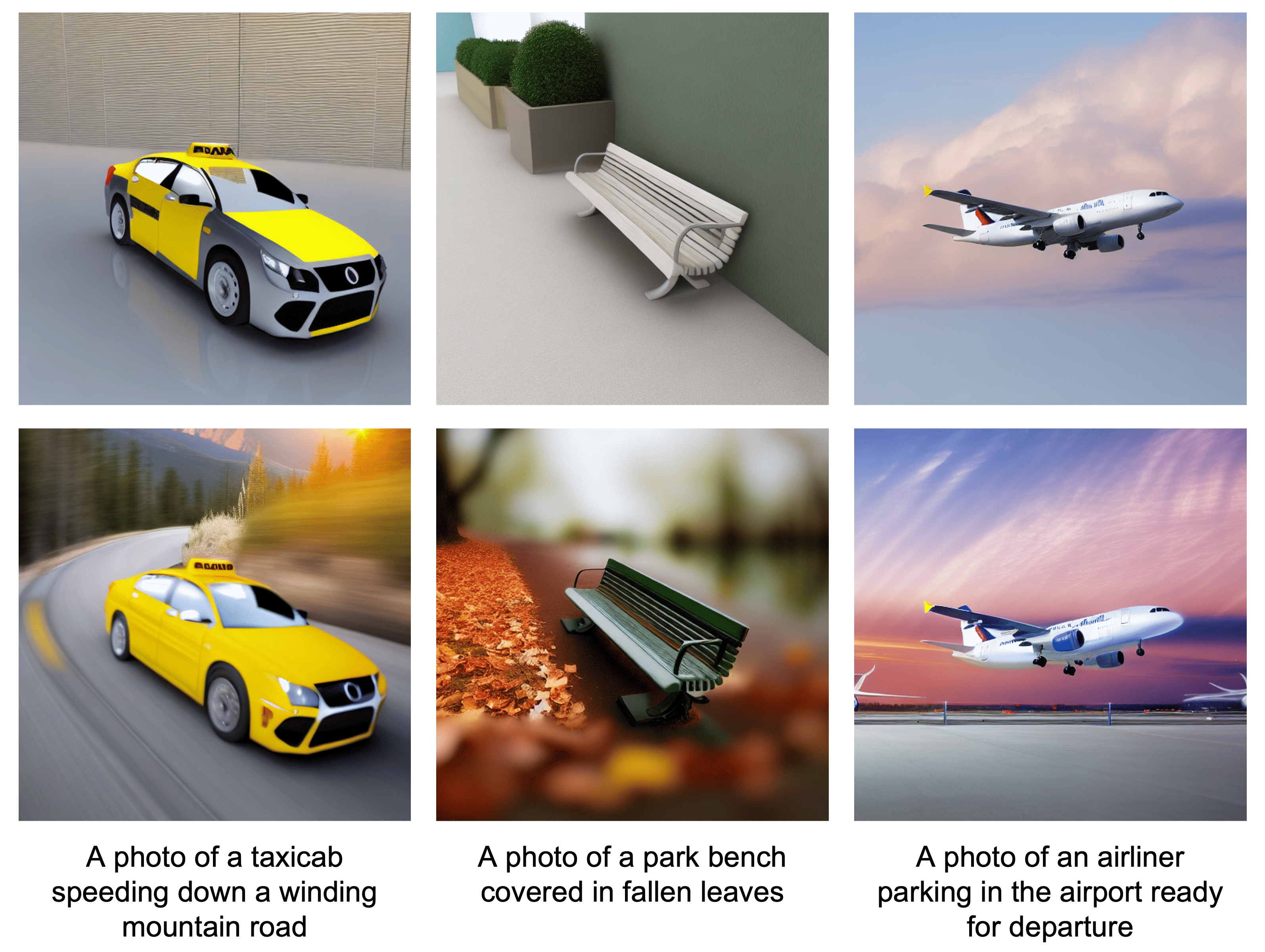

ImageNet3D: Towards General-Purpose Object-Level 3D Understanding

In Advances in Neural Information Processing Systems , Oct 2024

We present ImageNet3D, a large dataset for general-purpose object-level 3D understanding.

-

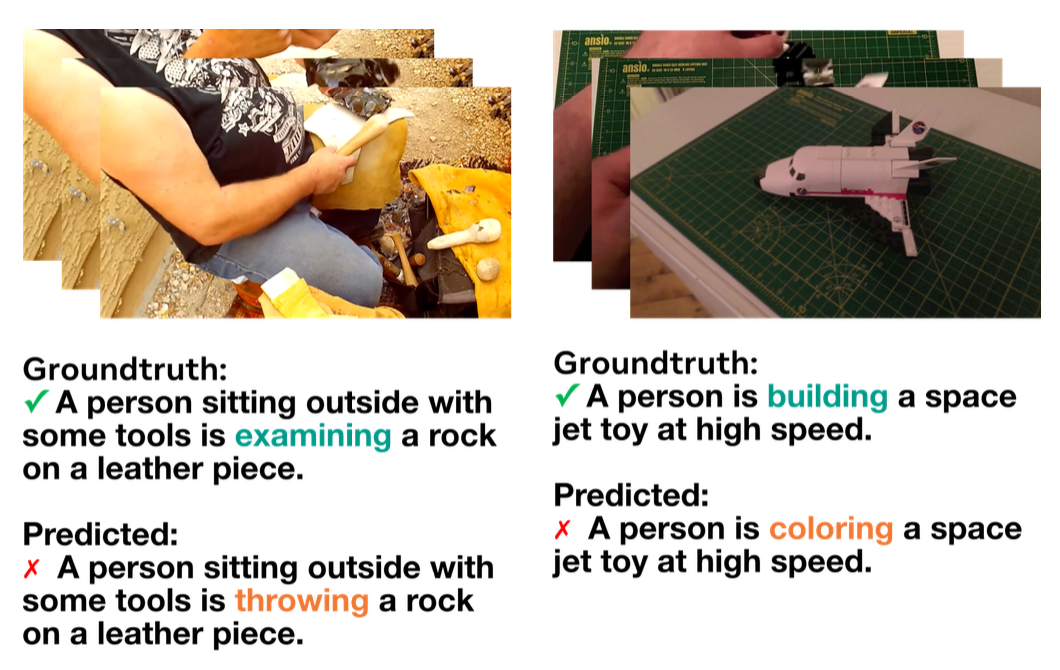

Rethinking Video-Text Understanding: Retrieval from Counterfactually Augmented Data

In European Conference on Computer Vision , Oct 2024

(Strong Double Blind)

We propose a novel task, retrieval from counterfacually augmented data, and a dataset, Feint6K, for video-text understanding.

-

Generating Images with 3D Annotations Using Diffusion Models

Wufei Ma*,

Qihao Liu* ,

Jiahao Wang* ,

Angtian Wang,

Xiaoding Yuan , Yi Zhang,

Zihao Xiao,

Guofeng Zhang , Beijia Lu, Ruxiao Duan, Yongrui Qi,

Adam Kortylewski ,

Yaoyao Liu, and

Alan Yuille In The Twelfth International Conference on Learning Representations , Oct 2024

(Spotlight, 5%)

We propose 3D-DST that generates synthetic data with 3D groundtruth by incorporating 3D geomeotry control into diffusion models. With our diverse prompt generation, we effectively improve both in-distribution (ID) and out-of-distribution (OOD) performance for various 2D and 3D vision tasks.

Reality Labs,

Reality Labs,  Research Asia,

Research Asia,  Frontier AI & Robotics (FAR) and AWS CV Science,

Frontier AI & Robotics (FAR) and AWS CV Science,  Research, and collaborated with many exceptional researchers.

Research, and collaborated with many exceptional researchers.